Most of the ideas around the new OnWindowTransaction event handler in FileMaker 2023 are about using it for audit logging. So we won’t go there, but we will highlight two ideas on how this feature can be used almost immediately and to great effect.

The first one is using it to track deletes. The second one is more involved and centers around making data available for reporting, which will be covered in a later blog post.

Tracking deletes has always been difficult in FileMaker, and all audit log approaches had to jump through many hoops to try to include those actions. This new OnWindowTransaction feature makes it a breeze, except that there are some twists that made writing this article a very interesting journey of discovery.

OnWindowTransaction

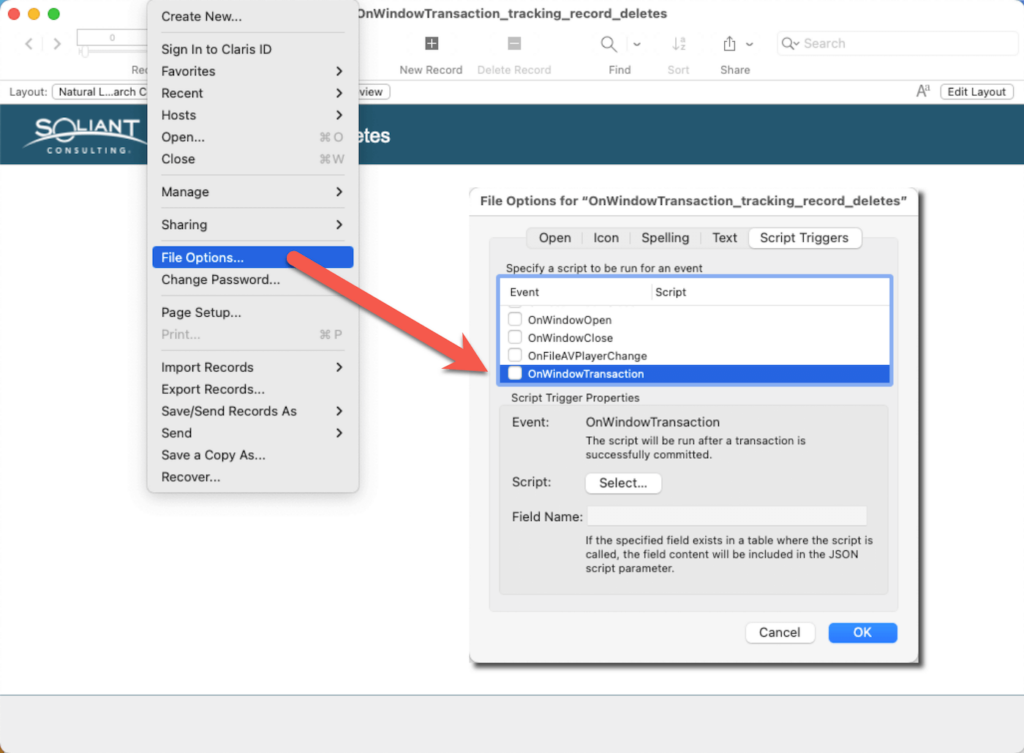

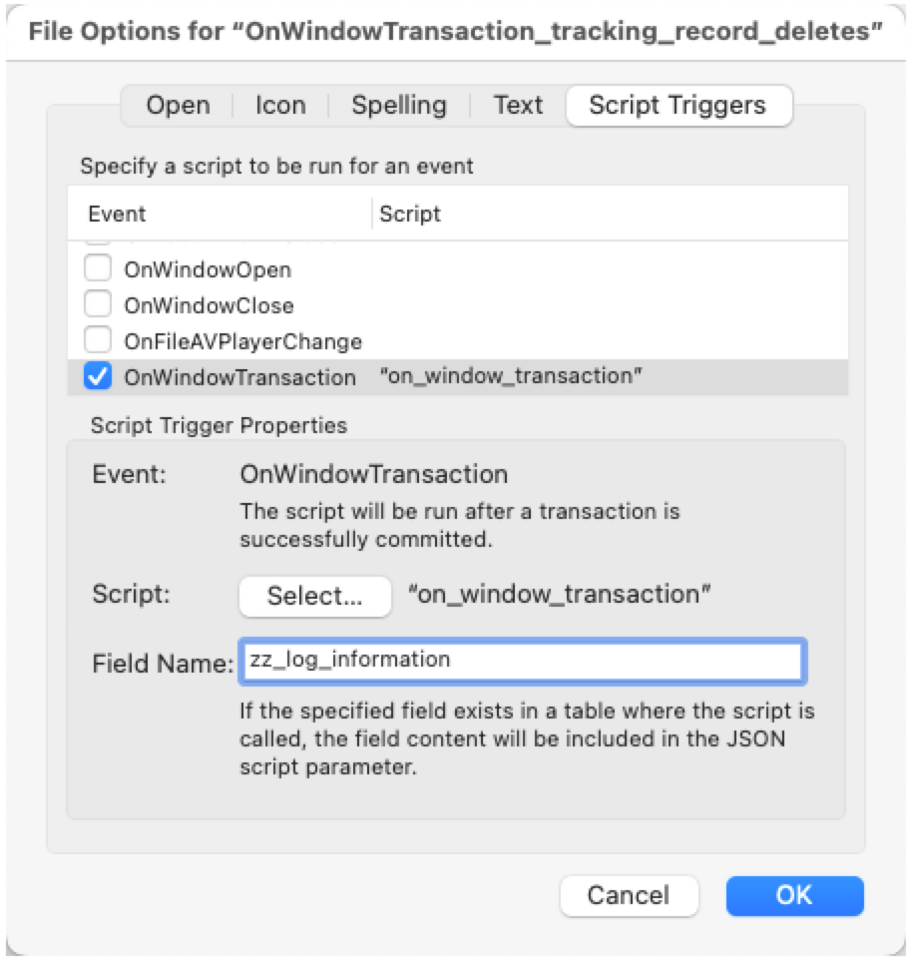

Here is a quick recap on this new feature: it is a file-based event trigger; you set it in the same place as you set the OnFirstWindowOpen triggers and its siblings:

When enabled, the script you assign to it will fire for every transaction in any window of the file. That means even for transactions involving data stored in tables in other files – as long as that transaction happens in a window of this file.

Do You Need to Use the Transaction Feature?

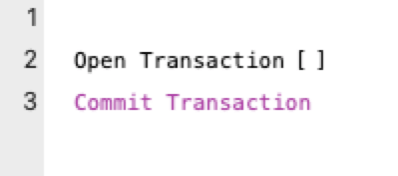

Just to be clear: using OnWindowTransaction does not mean that you need to use the 19.6 Transactions feature:

For the OnWindowTransaction feature, a “transaction” is any commit, whether it is explicit by using the Commit Record script step or implicit by navigating to another record or layout or by clicking outside an edited field. And this new feature covers record creation, changes to records, and record deletion.

Delete a Record

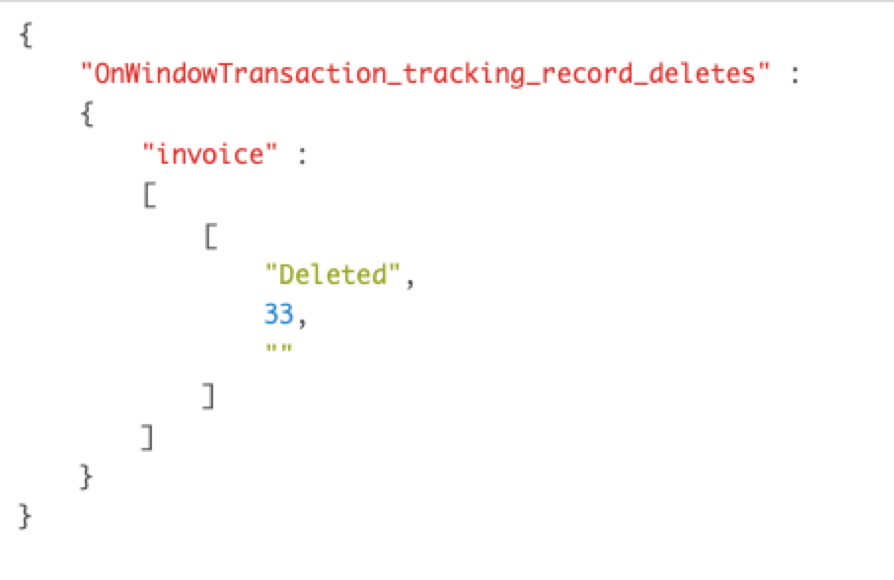

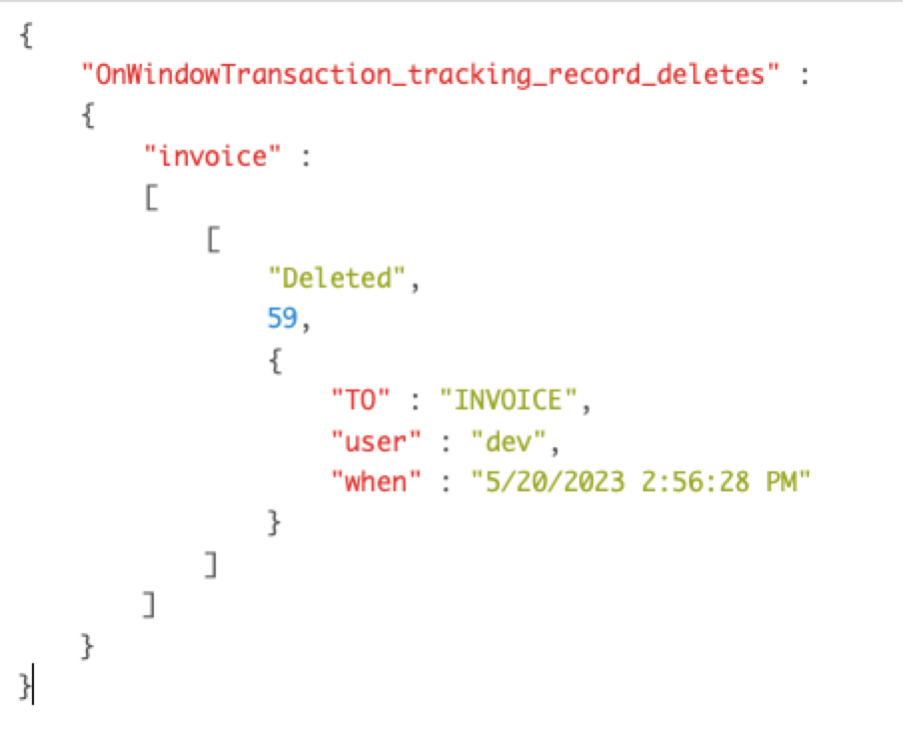

With the OnWindowTransaction trigger enabled in the File Options dialog, when you delete a record, FileMaker will capture some basic information in JSON format:

- The name of the file

- The name of the base table (i.e., Invoice)

- The action (Deleted)

- And the internal record id (which you can get from Get(RecordID))

This data may be sufficient for your needs. With it, you can certainly find the record in a backup in case you need to restore that record. But chances are you will also want to know additional information, such as what data was deleted, who deleted it, from what layout, etc.

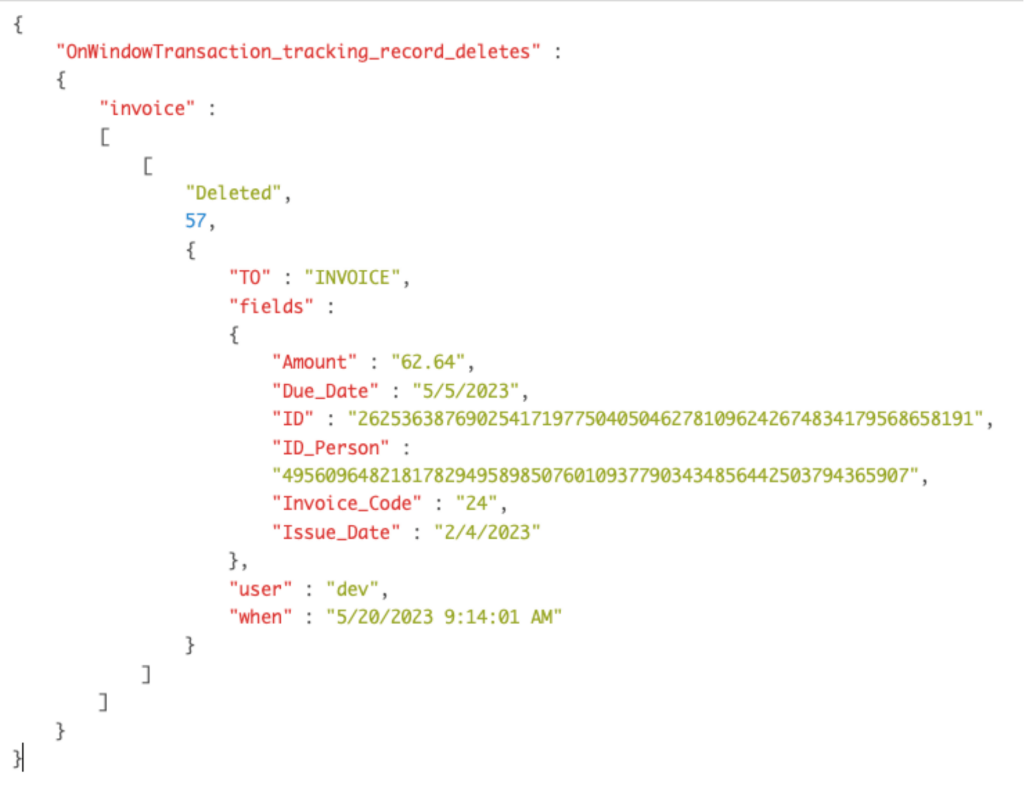

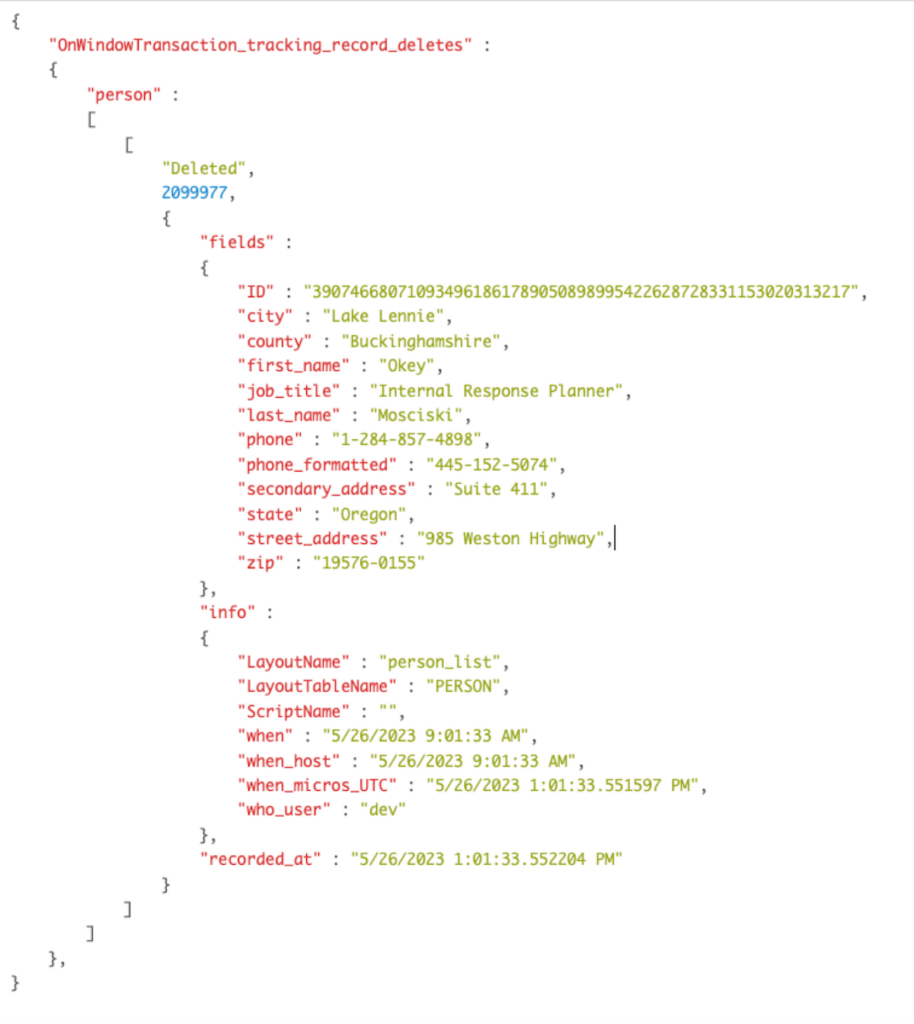

For example, when someone deletes an invoice record, you might want to see something like this:

This is easily achievable because the OnWindowTransaction feature allows you to add information to the JSON it produces. It automatically looks for a field named the same as the event (OnWindowTransaction) and in the tables where the data changes happened; it then adds the content from that field to the JSON that is sent to the trigger script.

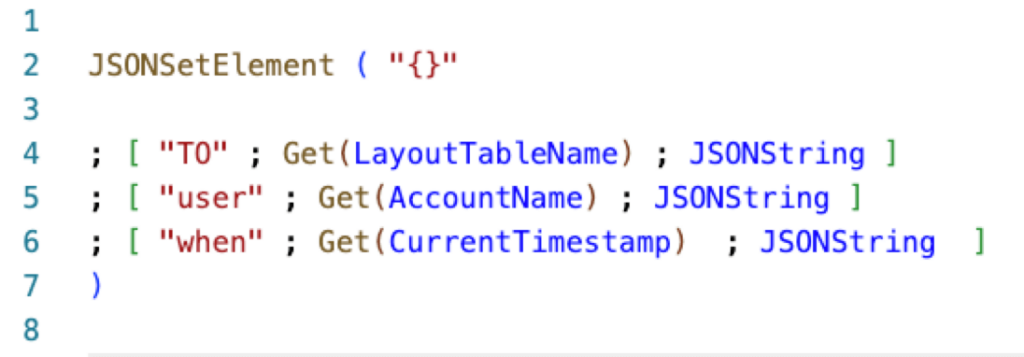

Adding data such as the table occurrence name, the delete timestamp, and the user is very straightforward when you make the zz_log_information field a calculation like this:

Then the logged information becomes:

Adding Field Data Statically

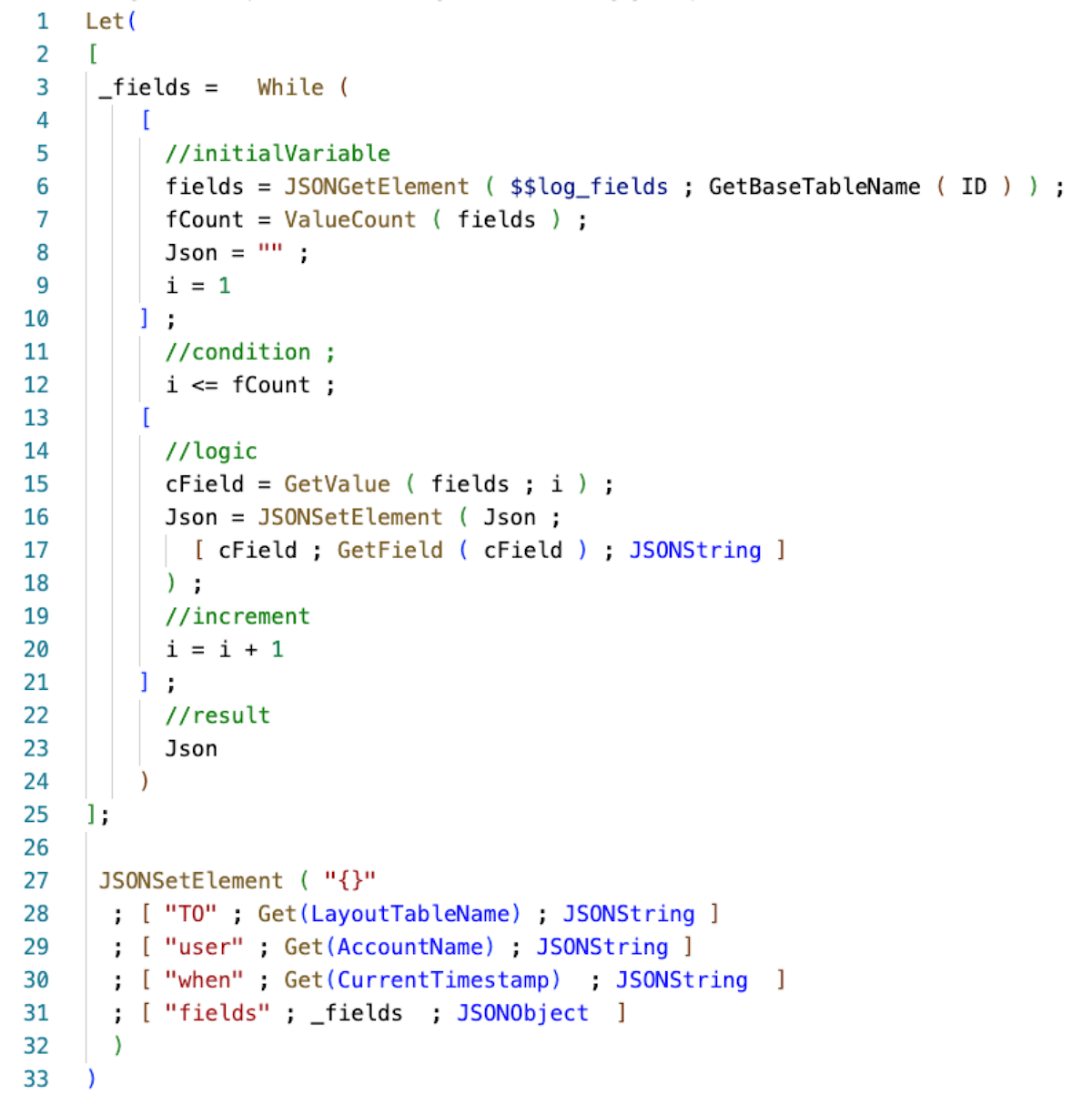

Adding the fields and their data is a little more work. You need to create a list of fields you want to include per table that you want to track. And then, your supplemental field calculation needs to iterate through that list and grab the field values.

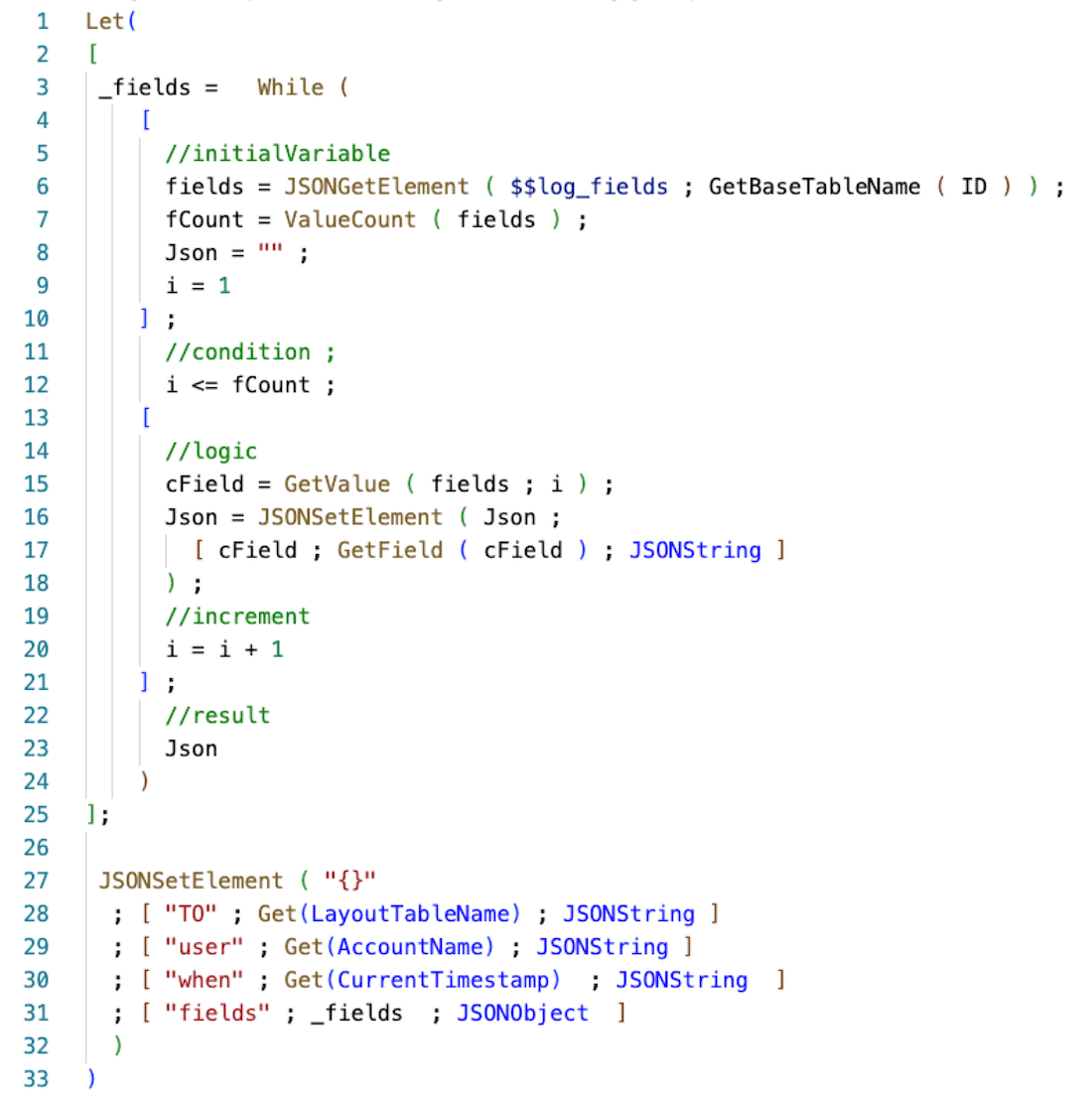

The calculation for that uses the While() function and looks like this:

The $$log_fields variable would then look like below. A JSON construct with each table name as the key and a return-delimited list (\r represents a return in JSON) with the fields for that table.

Adding Field Data Dynamically

Ideally, you will want to avoid hard-coding field names so that any field name changes do not break your logging.

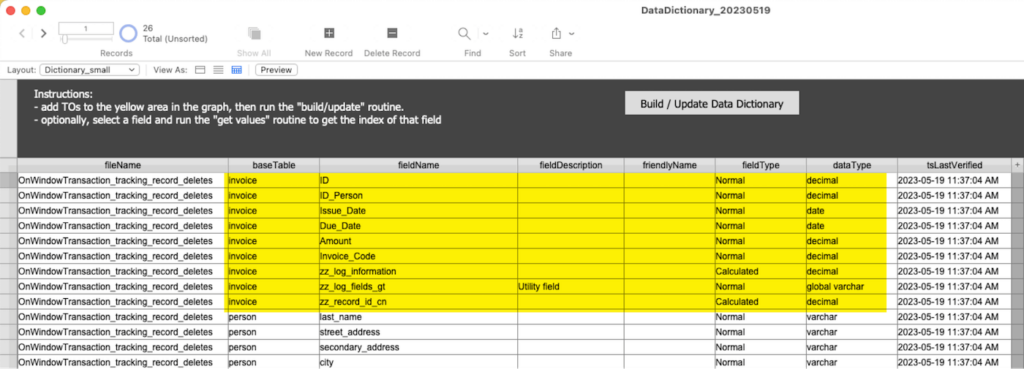

For this, you can use a data dictionary that uses the internal FileMaker meta tables (FileMaker_Fields, FileMaker_Tables…). Let’s look at the DataDictionary.fmp12 file to see how that works.

The invoice table has some fields that we likely want to exclude, such as global fields, calculation fields, and summary fields. The global fields are meaningless, and the summary and calc fields may be expensive in terms of performance for FileMaker to generate their values to add to the output.

Generally, you’ll want to grab all fields where the fieldType is Normal, and the datatype does not start with the word global. In the demo file, the script named gather_fields does that and stores the result as a JSON object in a global variable. The script runs as part of the OnFirstWindowOpen event, so that we only have to do this once.

Then, the calculation for zz_log_information uses a While() function to extract the list of fields for the affected basetable and loops through each of these fields, using GetField() to retrieve its value. On line 31 of the script below, the result is added to the JSON you already had.

That is how you can record more useful information about the deleted record.

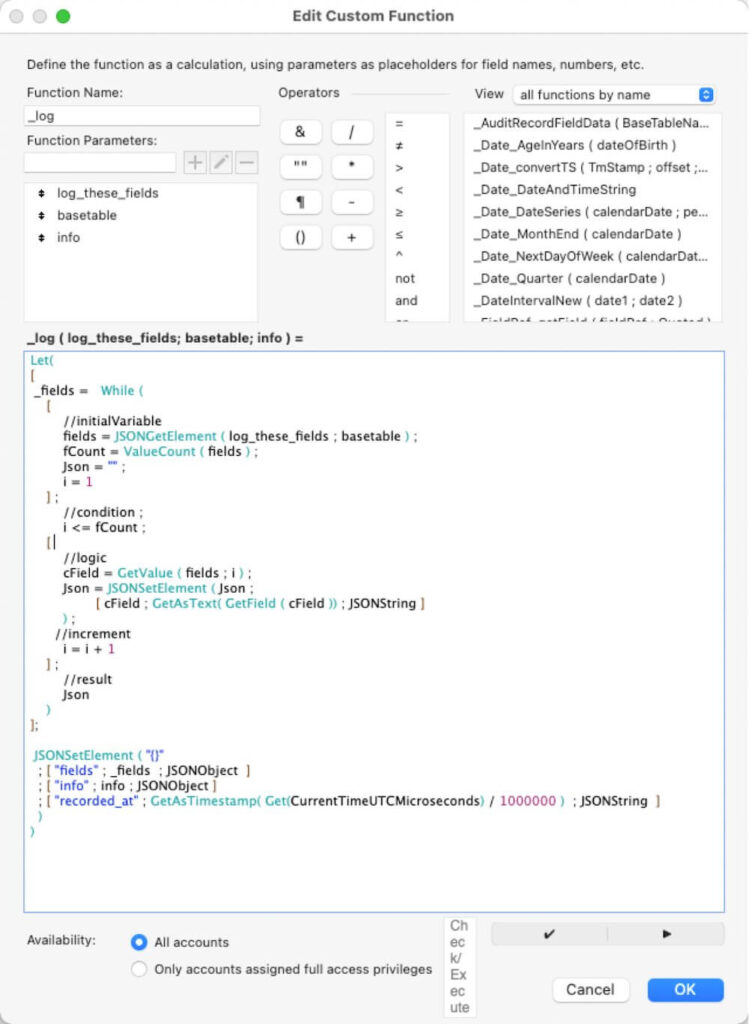

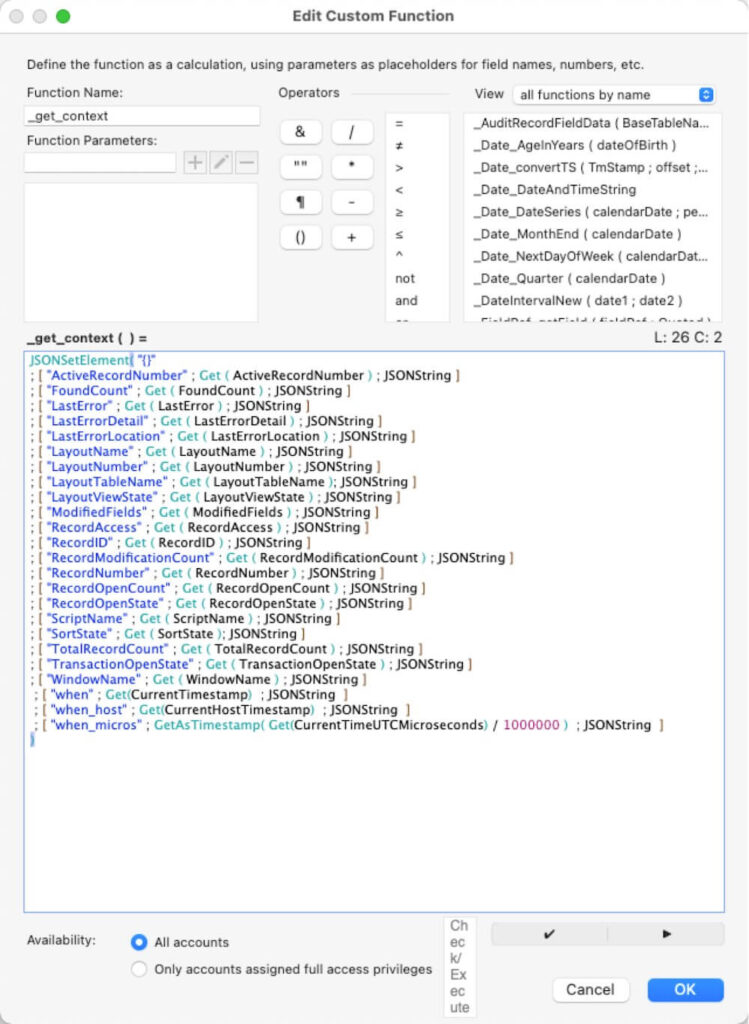

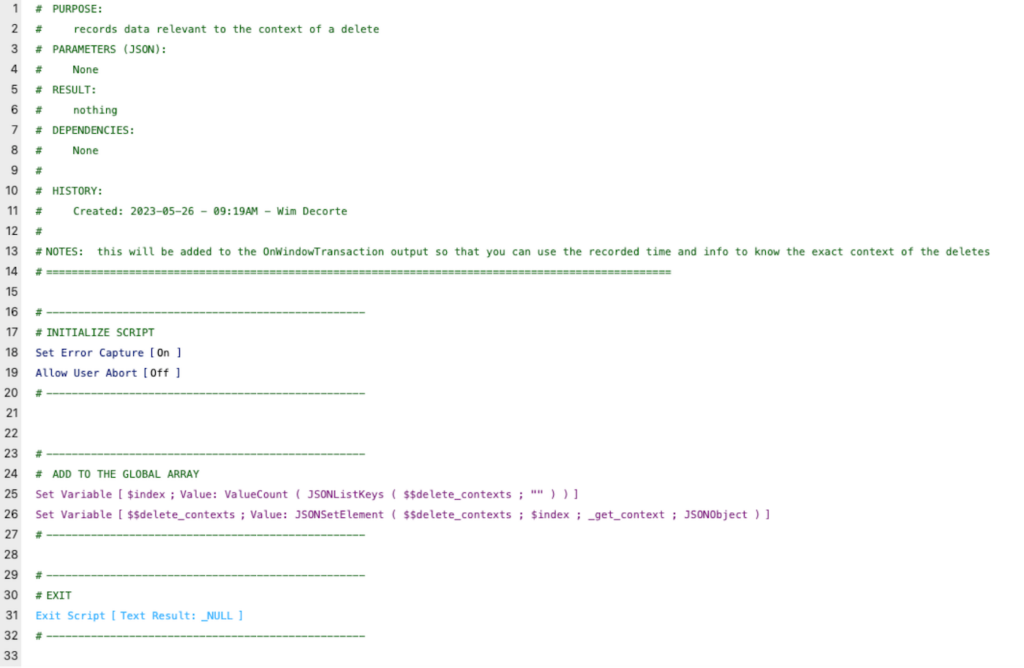

Because you will find yourself needing to create this calculation in every table, you can abstract it a little more by making it a Custom Function. In the demo file, you will find one named _log and another one named _get_context. The _get_context custom function collects all the Get() functions that you deem important. The _log custom function receives the JSON with the list of fields you want to log (which is in our $$log_fields variable collected as part of the OnOpen script) and the name of the basetable affected by the operation. You can use the info parameter to add any additional data that you want, for instance, the output from the _get_context function.

Logging the Output

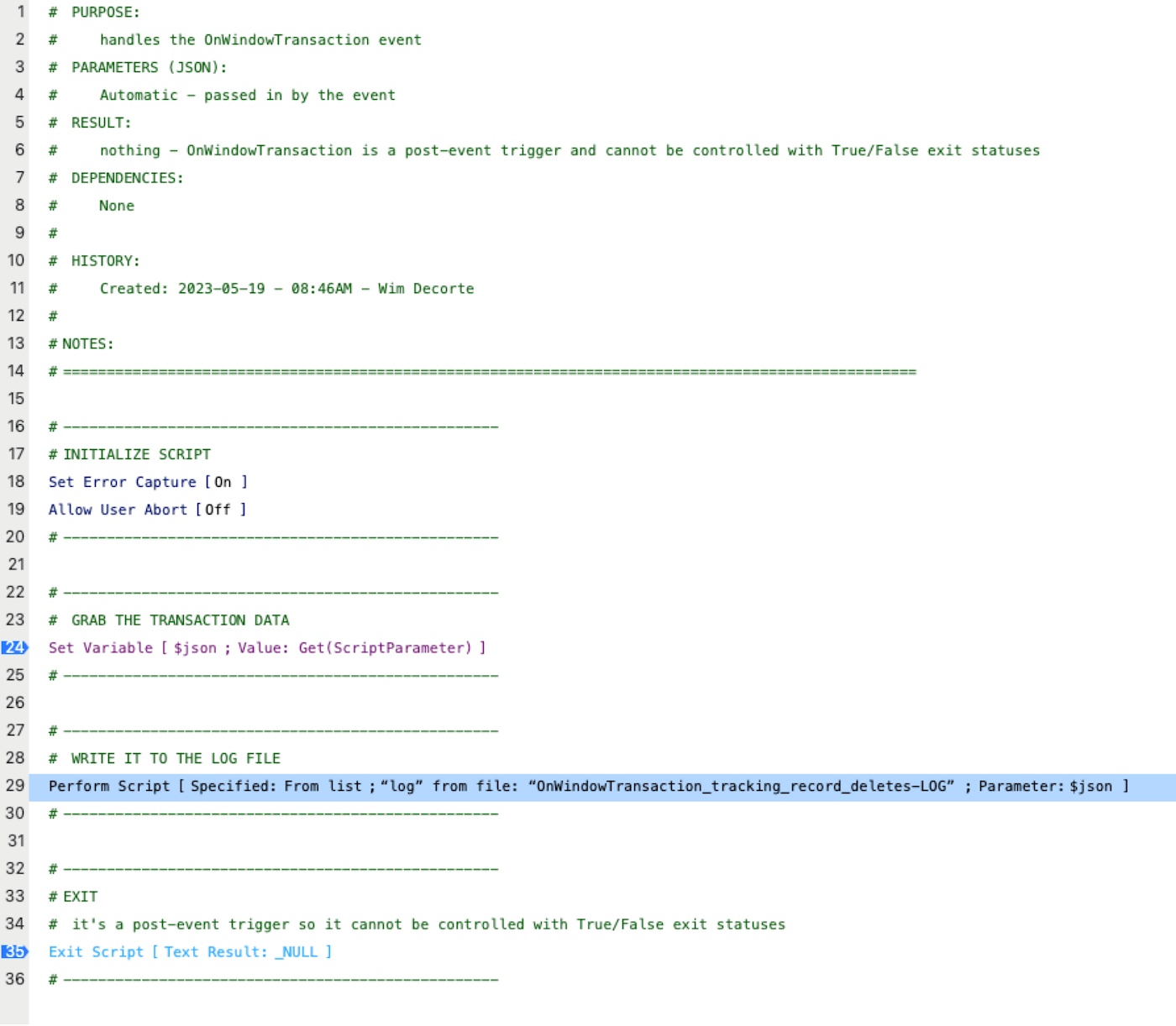

The script that handles the event receives the JSON produced by OnWindowTransaction, and in the demo file, it is passed on to a script in another file to create an actual log record:

Recording Only Deletes

At this point, all data changes will be logged, not only the deletes. If you want only the deletes, you will need to inspect the JSON to check what it contains and remove the actions that are not deletes.

The OnWindowTransaction script in the demo file does that.

Single Context Deletes

When a user manually deletes a record or if a script deletes a record, then your log file will receive the JSON that describes the event and log all the useful information you need. So far, so good. It’s always a single operation that gets logged immediately.

If you delete records in batches, then note that:

- The Delete All Records and Delete Found Records will create one or more log entries depending on the size of the found set. FileMaker commits these bulk operations in batches.

- The Truncate Table script step does NOT trigger the OnWindowTransaction event, so nothing is logged for that operation.

- Cascading deletes behave like a transaction-style delete, the logged information will contain information about all the deleted records in all affected tables, so do read on because it may not the data you expect…

Transaction-style Deletes

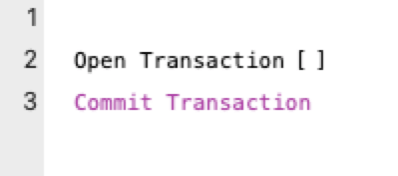

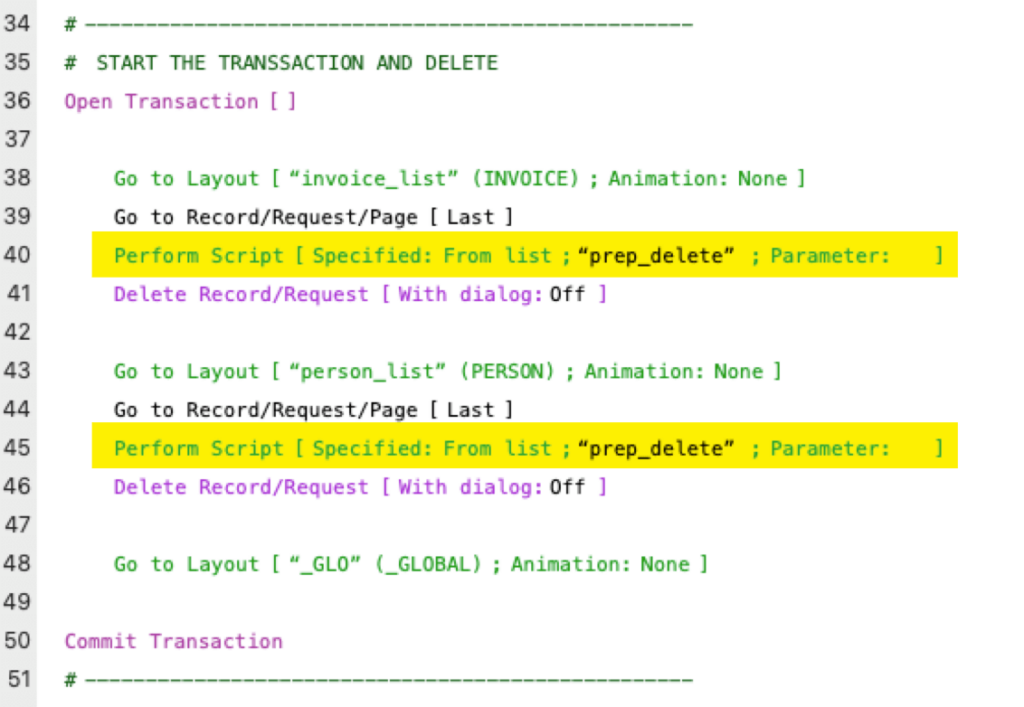

What happens when you do use 19.6-style Transactions and a record delete happens inside the Open Transaction / Commit Transaction construct?

In this scenario, there is some complexity around the timing of when the supplemental data is generated.

The zz_log_information field that is used to supply the supplemental information is an unstored calculation. That is because we want to be certain that it evaluates when FileMaker collects the information to log, which is the moment that the commit happens (explicitly or otherwise). For non-transactional operations, changing contexts by going to another record or another layout will first force a commit. The supplemental information’s Get() functions will always be able to record the information at that exact moment, and the info will accurately reflect where the user is at that moment in time.

When you use transactions, then that commit happens only at the end of the construct:

And before it gets to that Commit Transaction, a transaction can have any number of operations on different contexts. You can even switch layouts and records during a transaction without triggering a commit.

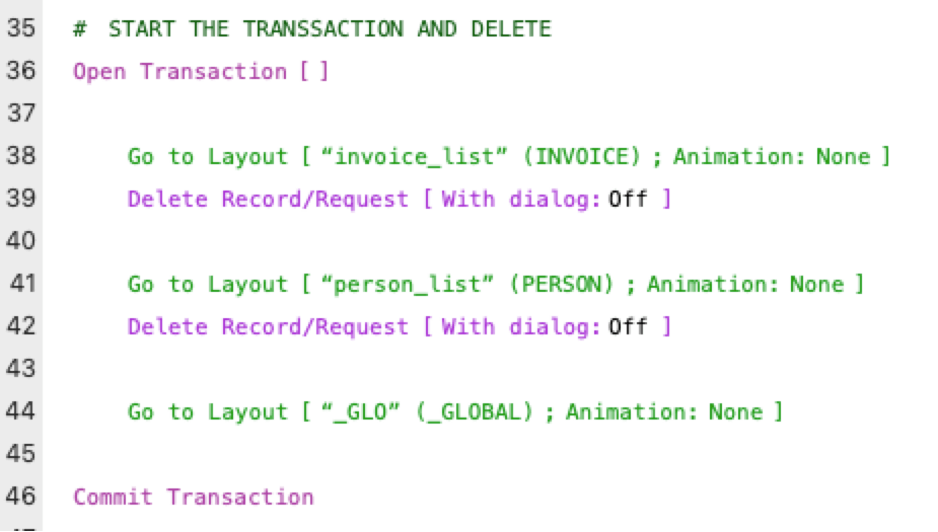

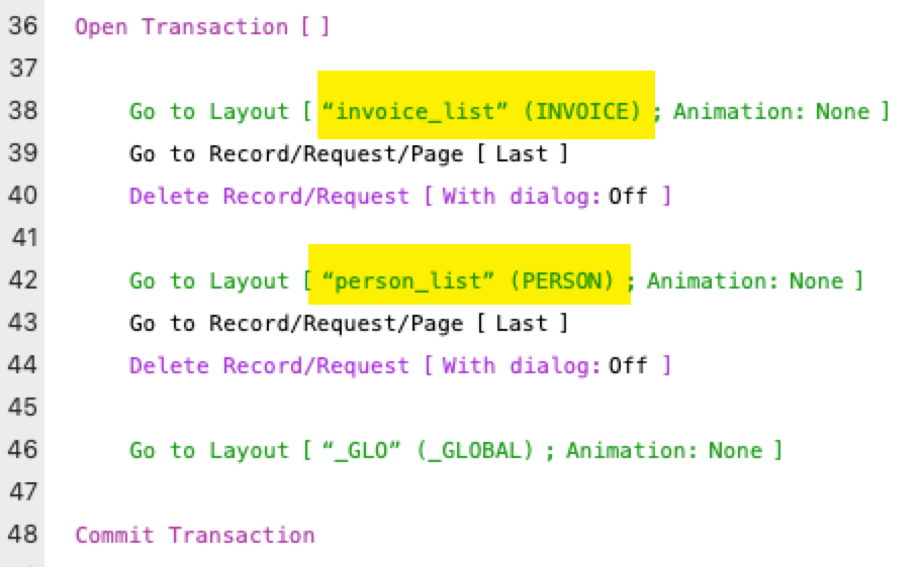

Then, when the transaction ends with Commit Transaction, the unstored calculations only know of the context at that exact moment. For example, if a transaction does this:

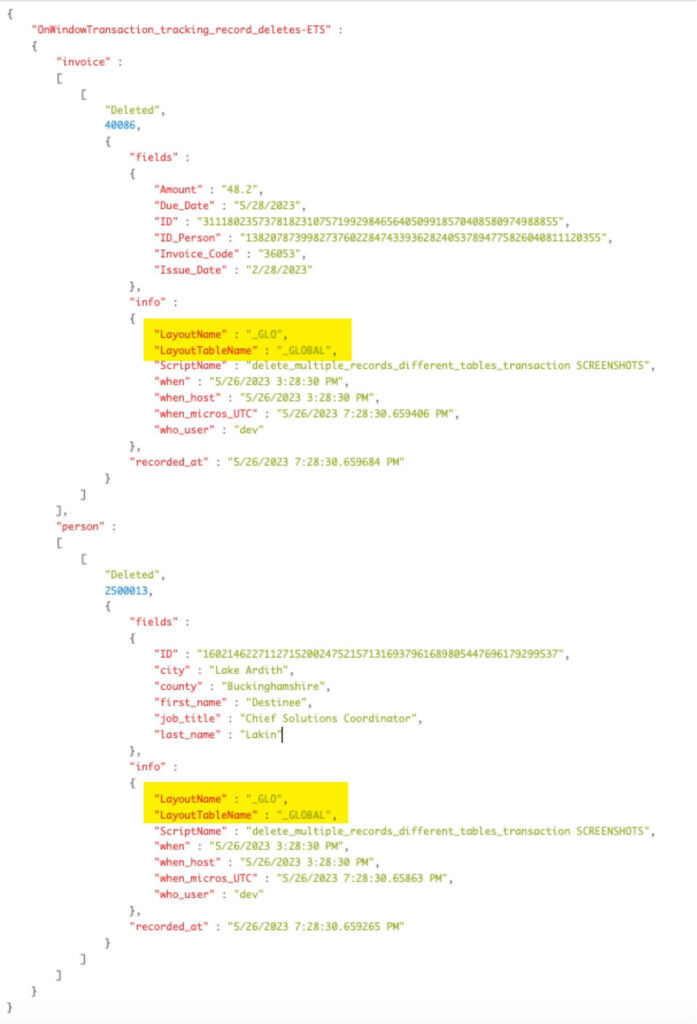

Then the resulting JSON from OnWindowTransaction will show _GLO as the layout name and _GLOBAL as the table occurrence. The Get() functions get called on line 46, and at that moment, FileMaker is on the _GLO layout, and that is what the unstored calculations in each table will pick up on.

You can change the supplemental field calc to be stored instead of unstored, but that doesn’t solve the problem either. A stored calculation’s value is set at the time the record is created or when any of its dependencies are updated (the fields it references). But, deleting a record does not change any record content, so the stored field does not get updated.

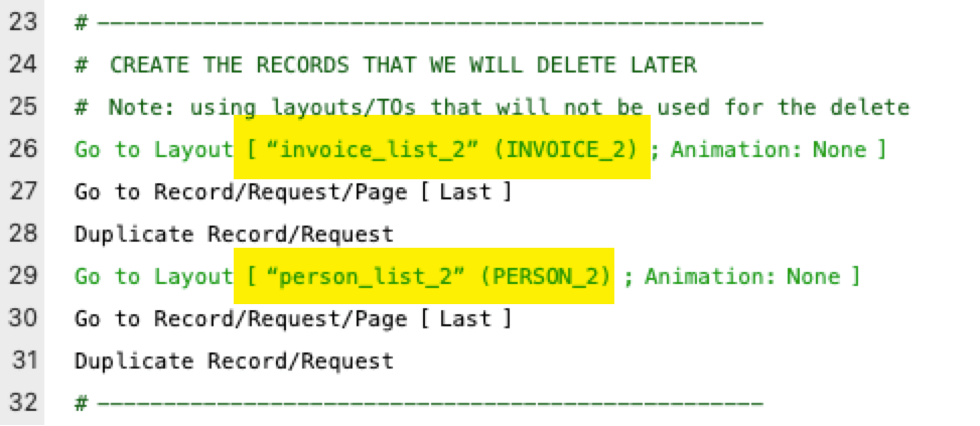

When, for instance, you create two records in a totally different context than what you use to delete the records, as shown in the two screenshots:

Then the supplemental information provided by the stored calculation will reflect information collected at the time of creation, not the time of deletion:

If a transaction does not change contexts (goes to another layout or record), then the recorded data will all be accurate. But if your transaction does deletes from different places, then neither a stored nor an unstored supplemental calc field will give you the right information for the layout and TO names.

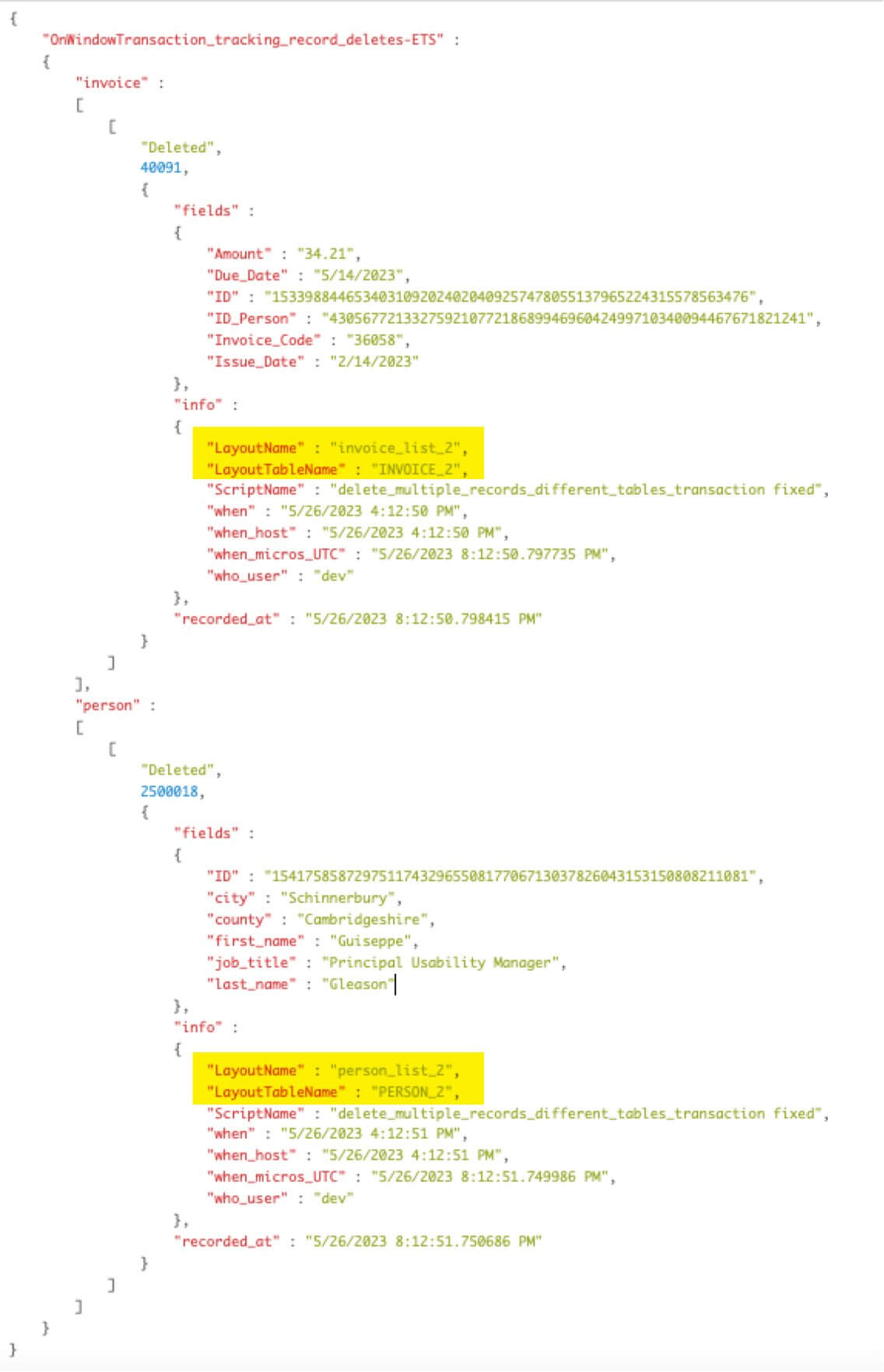

One way to solve this is to add your own data collection and then add it to the JSON produced by FileMaker in the event handler script.

Line 26 in this script calls the custom function to collect all relevant Get() functions and adds it to a JSON array named $$delete_contexts (we’re using an array because a script could do multiple deletes from multiple contexts when using 19.6-style transactions):

Then in the transaction script, you can call this prep script just before the delete actions:

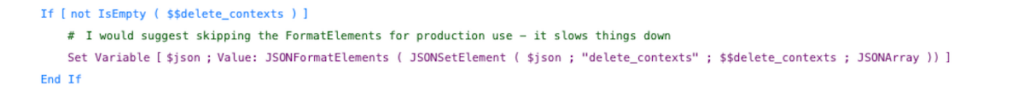

And in the event handler script, you include a section that adds this array to the JSON that is produced by the OnWindowTransaction event before it is sent off to the logging script:

Closing Thoughts on Performance Impact

The more complex you make the supplemental calculation field, the longer it will take to process each commit.

Similarly, the more complex you make the script that handles the JSON, the longer each commit action will take.

In the demo example, with the most complex example of deleting multiple records in a transaction, from the start of the transaction to the record being logged, adds just shy of 8 milliseconds to the commit. Since that includes recording the context and inspecting the JSON to record only the deletes and then writing out the log record, that is pretty fast and is small enough that for most single operations, the added time is irrelevant.

But if you were to loop through 1000 records and delete each one, then that would add 8 seconds to your total operation. In this case, it would be faster to do a 19.6 style transaction or produce a found set to delete all at once instead of doing many single operations.

Leveraging Tracking Record Deletes in FileMaker 2023

Collecting information about record deletes is now extremely easy with the OnWindowTransaction event. Especially anything that happens as a single event, such as a user manually deleting a record or a scripted flow that does not use 19.6-style transactions.

There is some complexity to those 19.6-style transactions if your transactions contain deletes and changes context before the transaction gets committed.

Deletion logging is fast enough and useful enough that we recommend including it as a standard feature in any FileMaker database. It is as easy to add to a legacy system as it is to a new system.